Generative Artificial Intelligence has a good side.

AI is incredibly good at some administrative, research, and analytical tasks: sorting through large amounts of data at incredible speed (a boon to medical research), accelerating data entry, parsing analytics, and making predictions.

It’s also good at generating material that is highly repeatable, such as legal contracts and HR boilerplate.

I use it in my professional work, for example by feeding it the bullet-point parameters of a project and asking it to turn them into a professional-looking scope of work or proposal.

I have fed it business pitch decks and asked it to help me find errors, inconsistencies, or missing sections that a contemporary presentation would normally include.

It won’t design the deck for you; it’s mostly terrible at that sort of thing. It’s reductionist, and its design capabilities on anything larger than a single image are very limited.

It’s also handcuffed by some behind-the-scenes IP guidelines, mostly the legalese and guardrails imposed by copyright litigation.

It’s like having a quixotic but talented research assistant, with a mercurial and obtuse code of ethics.

So why does everyone hate it?

I often feel like a double agent.

I live in two very distinct cultural and social and professional worlds.

On the one side, many of my closest friends are artists, musicians, fine artists, writers. They have real reason to fear generative AI.

After all, it is getting frighteningly good at mimicry. It threatens, or seems to threaten, designers and writers and artists and musicians with generative hackwork that is “good enough” for the masses, as determined by the corporate penny-pinchers of mass media.

The open question of copyright, which is being litigated as we speak, is problematic.

The drive to “move fast and break things,” as Zuckerberg once urged, created substantial issues around the materials fed into these Large Language Models, and how to compensate the owners of that intellectual property.

On the output side, the AI companies need to indemnify their current customers against claims that material created with their engines violates intellectual property law.

Meanwhile, many of my longtime friends are deep in technology and the startup world. They are excited about the business potential of generative AI. The accelerative qualities of it.

They see an evolving workforce. Startups of three people instead of 20, or 100. Speed to market. Rapid prototyping. Cost efficiency in an era when the cost of starting anything puts you behind the 8 ball from day one (see Paying the Loser Tax).

When you’re starting something, isn’t it easier to manage 3 personalities instead of 20?

Corollary: when you switch from managing a process to managing personalities, is that naturally the evolution, or do you just work with a bunch of assholes?

I did my time in generative AI, with a startup called Lazaza. We built a tool, aimed at small businesses, that created ads from minimal input. The concept was that we were democratizing advertising: anyone, regardless of budget, design skill or industry knowledge, could create, buy and run online advertising.

It was amazing to see how quickly what was innovative and new became commoditized. Merely generating an ad went from wow factor to everyday banal in months.

Meanwhile, I learned that other AI startups were basically operating existing tools by hand, behind a facade of their own “proprietary” software.

Somewhere between carnival barker and technologist.

I know that the trick is not always how good your software is, although that is sometimes very, very important.

The trick is how convincingly you can tell your story to make the customer believe that they need your product, desperately, and to separate the customer from their money.

Repeatedly. With low churn.

(This was not written with AI, by the way.)

Ok, hold on.

It’s important to make some distinctions about what Artificial Intelligence actually is.

There is narrow or weak AI, which is what we have now: artificial intelligence trained for a specific task. It is trained on a data set and then makes predictions about an output, like ChatGPT (writing and conversation in a chat interface), Netflix’s recommendation engine (suggesting movies), or Midjourney (creating images from a prompt).

AGI, artificial general intelligence, is the “holy grail,” and it is something ChatGPT often mimics. It would be a technology trained across a wide variety of material, but with genuine understanding, learning and application of that knowledge. Think HAL 9000 in 2001: A Space Odyssey, Jarvis in The Avengers, or Data from Star Trek, depending on your nerdiness or cultural touchstones.

Then there is the distinction between Generative AI and Discriminative AI.

Discriminative AI you encounter in everyday life, all the time. And you have for quite some time.

It takes a knowledge base and sorts, classifies and predicts based on that knowledge.

For instance, when you shop online and get recommendations, or watch Netflix, that discriminative AI is sorting through reams of viewership data to give you the most likely and most relevant recommendations, based on your past behavior, and the behavior of people like you.

Diagnostic tools in medicine use similar discriminative systems, comparing your imaging or test results against a broad dataset to predict how likely you are to have certain conditions.

Generative AI, on the other hand, takes an enormous amount of data, processes it for patterns (for text, using a Large Language Model, which is essentially a neural network on steroids), and then creates “new” “content” based on your prompt and the patterns it has recognized.

It’s not writing, it’s generating predictive syntax.

It’s definitively not making art, although it can sometimes convince you that it is.

OK, I admit it. I love the technology side of the equation.

I’ve been just as curious before about the web, email, file sharing, cryptocurrency, video gaming, VR and AR, video streaming; the list goes on.

I was there at the original Napster, albeit briefly. Brought in by my friend Brandon Barber to help recruit indie record labels to the company’s burgeoning legal music service.

I was there at BitTorrent, overseeing product marketing for the company’s burgeoning legal service.

Are you sensing a pattern? I am a diehard lover of art, whether it’s literature, punk rock, great films, or compelling design. And I want to protect the people who make it, or who make its creation possible, with guardrails against its technological exploitation.

I adopt early. I got my first email address in 1991.

In 1986 I was on an online bulletin board, CompuServe, with my Commodore 64.

I used my first Content Management System for journalism in 1989 at the Minnesota Daily. A few years later, at Addicted to Noise, we used a CMS to publish music news, and I worked on one of those bulbous multicolored iMacs.

I was a Photoshop artist in 1994 on a Charlie Brown and Snoopy CD-ROM game at Morgan Interactive.

All this is to say that as long as there has been a publicly available internet, more or less, I’ve been onboard.

And when new technology launches, I’m usually lining up to try it out.

It’s rare that you see a technology so grandly insert itself into media and commerce as to disrupt everything else.

I’ve now lived through several distinct eras of this, including the internet itself and the arrival of movie and band websites. But the nuclear bomb that was file sharing, which disrupted and nearly destroyed the music industry, is the closest parallel to what we are experiencing right now.

There is the notion of sunk costs in business. Expenses that have already been incurred, and cannot be retrieved regardless of future decisions.

AI is essentially a sunk cost at this point.

Yes, the carbon footprint of training those Large Language Models was horrendous.

I hate to even go near this one. I don’t know enough about ecology and carbon footprints to have a defensible argument.

I do know that, by current estimates, a single ChatGPT query uses roughly as much energy as 5-10 Google searches, and not quite as much as streaming YouTube for 10 minutes.

Determining if and how we live with it, that’s the question.

In the world of startups, there’s an expectation that you won’t need as much money or as many people. Use Replit and have your coders check for errors, instead of writing the code by hand. Development is accelerated, and you have less money, less time, and harder expectations.

Don’t like it? Don’t play with the big boys.

Or so says the unhinged, unleashed tech bro in a fleece vest.

Video games no longer need to record endless NPC chatter to make their worlds feel lifelike. Characters will simply converse with you, driven by AI. Like ChatGPT.

The line between human interaction and AI is narrowing at a terrifying rate. People are falling in love with their AI companions. It’s so much easier to have a virtual girlfriend or boyfriend, than a messy human companion, with moods, needs, anger, hunger, wants, complaints.

The lines blur between what is really human and what is predictive technology. And they’re blurring earlier in the development cycle than I think anyone could have guessed.

We are not yet at AGI. And certainly not at superintelligence, which surpasses human knowledge and ability. Think Colossus: The Forbin Project, or WarGames if you’re less of a geek than I am.

And so yes, there are concerns, valid concerns.

The workforce will be very different in a few years. Maybe sooner.

But human-crafted art, with its soul, and resonance, and surprising revelations, cannot be mimicked by predictive syntax.

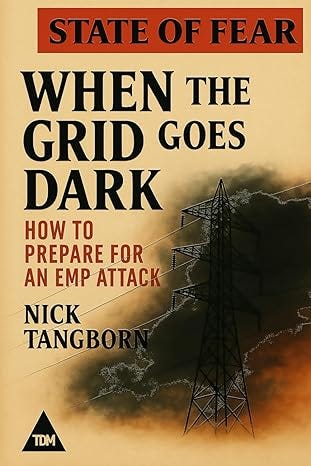

I look back at the AI-assisted book I “wrote” and published via Kindle Direct Publishing only a few months ago, as a sort of experiment.

It’s embarrassingly hacky to look at now, regardless of how much I rewrote and edited it. So much so that I’m taking it down.

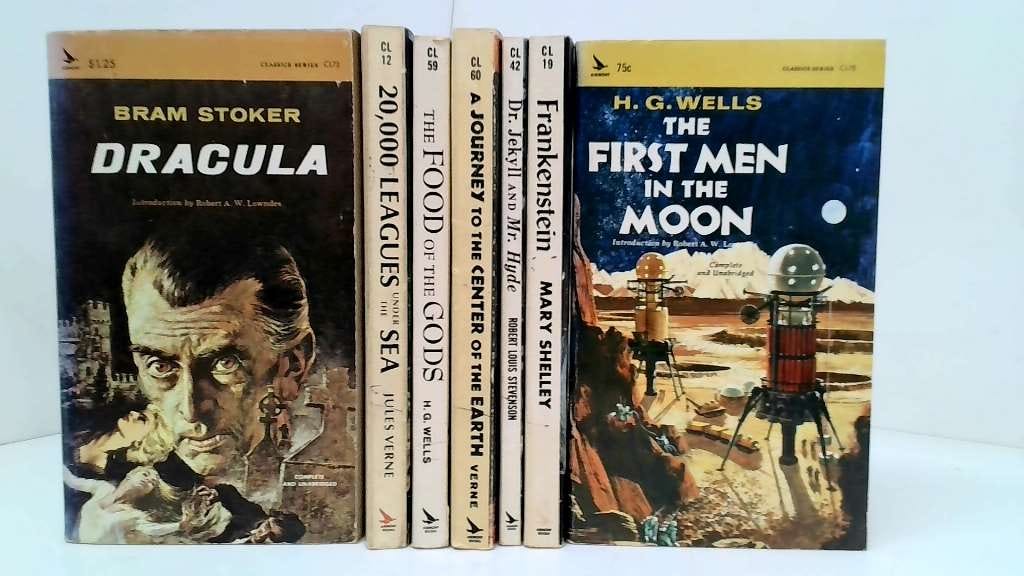

It’s a shame, though, as I really like this cover I created with the help of generative AI and some creative prompting and uploading.

I wanted it to look like the Airmont Classics I loved as a kid.

It excels at mimicry. It created what I wanted it to, and it looks pretty cool to me.

Did it excel at creating a compelling text? Hell no.

Was it a worthwhile experiment?

For me it was. At the very least I learned some important self-publishing tools, and the business math behind them.

And I know I didn’t need ChatGPT to do that.

I know that some people in my life will disagree vigorously with my opinions.

Hell, I disagree with myself on some of them.

I am unsure about the future as refracted through the lens of artificial intelligence.

I am unsure about the future of work, art, and media.

I do know that human-crafted art will always have a place.

We may need to wade through some shit to get back there.

Remember how everyone was clamoring about the demise of physical media?

I predicted in 2000 that LPs would make a comeback. I reasoned that people still fetishize physical things of beauty, which LPs can be. Not to mention the audio fidelity.

That is one of the few things I got right.

I personally have been putting together my own physical library of books and Blu-rays and even DVDs, to keep the films I love available to me regardless of streaming services’ licensing windows.

I can guarantee you that I will always treasure the work of a human intelligence like a Cormac McCarthy or a Leigh Brackett, or a Charles Portis or an N.K. Jemisin, or a Hemingway or a Flannery O’Connor.

AI can certainly create an approximation of McCarthy, but it can never create, out of whole cloth, something as compellingly original as this, from Blood Meridian:

“A legion of horribles, hundreds in number, half naked or clad in costumes attic or biblical or wardrobed out of a fevered dream with the skins of animals and silk finery and pieces of uniform still tracked with the blood of prior owners, coats of slain dragoons, frogged and braided cavalry jackets, one in a stovepipe hat and one with an umbrella and one in white stockings and a bloodstained wedding veil and some in headgear of cranefeathers or rawhide helmets that bore the horns of bull or buffalo and one in a pigeontailed coat worn backwards and otherwise naked and one in the armor of a Spanish conquistador, the breastplate and pauldrons deeply dented with old blows of mace or sabre done in another country by men whose very bones were dust and many with their braids spliced up with the hair of other beasts until they trailed upon the ground and their horses' ears and tails worked with bits of brightly colored cloth and one whose horse's whole head was painted crimson red and all the horsemen's faces gaudy and grotesque with daubings like a company of mounted clowns, death hilarious, all howling in a barbarous tongue and riding down upon them like a horde from a hell more horrible yet than the brimstone land of Christian reckoning, screeching and yammering and clothed in smoke like those vaporous beings in regions beyond right knowing where the eye wanders and the lip jerks and drools.”

OK so where does this leave me, or us?

Here’s my prediction. Generative AI visual art and writing will continue to bump against the tile walls of its own input pool. It will continue to be constrained by only being able to predict and recognize patterns that have already occurred.

It will be the Ouroboros, feeding on itself.

I asked ChatGPT yesterday to generate for me a list of the top 50 most populous municipalities in Texas. This is the end of the result it gave me:

Tyler – ~110,325

Sugar Land – ~108,511

Birdville (Irrelevant) skip

(actual 42) Irving duplicate? Skip duplicates

Irving done we need 42 new: not in list

Let me adjust: list uses top 41 from source. So fill to 50 using next: Irving dup; next after Tyler & Sugar Land from other list? Could approximate next top from datacommons: maybe Pearland etc included. But safe stop at top 50 approximated: 42. Allen is 38; 39 NewBraunfels; 40 Tyler; 41 Sugar Land; 42 Irving duplicate; 42 maybe Irving again skip. Actually better only list top 41 and note data.

So it’s not perfect. As a matter of fact, sometimes it’s straight up chaotic. Here, it appears to have created parameters for its search, generated a result, checked it for error or hallucination, found a duplicate, then tripped itself up and decided to just go with 41.

Shockingly human-like behavior. Or just a blip due to weird updates or bad internal prompting.

It will occasionally strike gold, but, as they say, even a blind chipmunk finds a nut, once in a while.

The backlash, with the general populace finding real human writing and visual art far more compelling and desirable, will happen sooner than we think.

AI exhaustion will be real, and soon.

I do believe that people will only be hoodwinked for so long. I hope that I’m right about that, anyway.

This is Are You Experienced. I’m Nick Tangborn and I welcome your feedback and comments.

You can reach me at nicholas (at) areyouexperienced.co as always.

This is an ongoing thought process, so I do really value complaints, suggestions, arguments. I encourage you to comment if you have hard thoughts in any direction.

And as always, this is made possible by contributions from you folks. Leave a tip if you so desire.